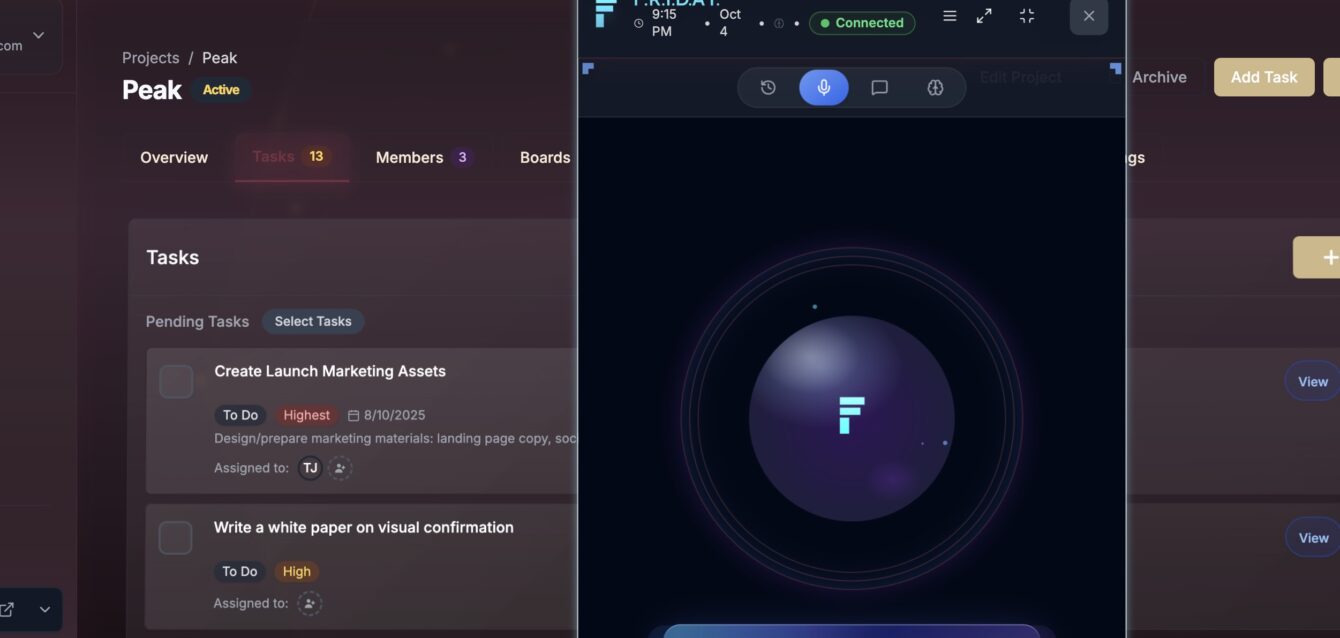

Visual Confirmation: The Missing Piece of Voice AI

How one fundamental breakthrough took voice-powered productivity from promising concept to production reality, and why seeing is believing when it comes to trusting AI.

There's a moment every product builder dreams about, the instant when everything clicks and a seemingly impossible problem reveals its elegant solution. For me, that moment came not from a breakthrough algorithm or a novel architecture, but from something far more fundamental: the realization that people need to see AI working, not just hear it say the work is done.

The Black Box Problem

When we first built F.R.I.D.A.Y. (our AI assistant for Peak), the technology was impressive. Natural language understanding, context retention across sessions, sophisticated task management—all the pieces were there. Users could say "Create a project for Q1 strategy with high priority" and F.R.I.D.A.Y. would execute flawlessly.

The AI worked. The users didn't trust it.

The pattern was consistent across early testing: Users would issue a command, F.R.I.D.A.Y. would confirm verbally ("Created project Q1 Strategy with high priority"), and then users would immediately navigate to the projects page to verify it actually happened. Every. Single. Time.

We had built an invisible assistant in a world that demands visibility. Voice confirmation alone, no matter how sophisticated, couldn't overcome decades of conditioning that taught users they must see something before they trust it.

The Discovery

The breakthrough came from obsessing over a simple question: What if F.R.I.D.A.Y. didn't just tell you she completed a task—what if she showed you?

Not in a summary screen. Not in a notification. But in real time, at the exact moment the command executes, with the interface updating immediately to reflect the change.

The Insight: Every action F.R.I.D.A.Y. takes needs a visual counterpart that happens simultaneously with her voice response. The GUI isn't just for manual interaction—it's the confidence layer that makes voice AI believable.

This wasn't about adding animations or flourishes. This was about fundamentally rethinking the relationship between voice AI and visual interfaces. They needed to be one seamless system, not two parallel experiences.

The Four Pillars of Visual Confirmation

Through hundreds of iterations and countless user tests, we distilled visual confirmation down to four core principles. These aren't technical specifications—they're fundamental truths about how humans build confidence in AI systems.

Immediacy: Trust Happens in Milliseconds

The visual response must occur within the same cognitive moment as the voice command. Any delay—even a second—breaks the connection and introduces doubt. When you say "Create task for code review," you should see that task appear before F.R.I.D.A.Y. finishes her verbal confirmation. The visual change isn't a follow-up; it's simultaneous proof.

Specificity: Show the Exact Change

Generic feedback destroys confidence. If F.R.I.D.A.Y. creates a project, the user should see that specific project appear—with the right name, the right status, in the right location. Vague "success" indicators feel like placeholders. Specific, accurate visual changes feel like magic.

Context: Navigate to Where It Matters

Visual confirmation isn't just about showing a change—it's about showing it in the right context. Create a task in the Playbook project? The interface should navigate to Playbook's task board and highlight the new task. The user shouldn't have to hunt for the result. The result should present itself.

Consistency: Every Action, Every Time

Confidence is built through reliability. Visual confirmation can't be a sometimes feature—it must be a fundamental contract. Every action F.R.I.D.A.Y. takes produces a corresponding visual update. No exceptions. When users know they'll always see confirmation, they stop needing to verify manually.
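The four pillars map fairly directly onto code. The TypeScript below is a minimal sketch under stated assumptions, not Peak's implementation: the article doesn't describe F.R.I.D.A.Y.'s internals, so `Task`, `VisualUpdate`, `ActionResult`, `createTask`, `present`, the route format, and the `FakeUi` stand-in are all hypothetical. Consistency is enforced by the type system, because an action cannot return a result without a `visual` field; specificity and context come from carrying the exact item ID and the route to its view; immediacy comes from applying the visual update before speech starts.

```typescript
// Hypothetical types: Peak's real data model and UI APIs are not described in the article.
interface Task {
  id: string;
  title: string;
  project: string;
  priority: "low" | "medium" | "high";
}

// The visual half of every action.
interface VisualUpdate {
  route: string;       // Context: the view where the change lives
  highlightId: string; // Specificity: the exact item that changed
}

// Consistency: `visual` is required, so no action can skip its visual counterpart.
interface ActionResult {
  visual: VisualUpdate;
  speech: string; // text for the voice confirmation
}

// Stand-in for the real UI layer; it only records calls so their order can be checked.
class FakeUi {
  readonly log: string[] = [];
  navigate(route: string): void { this.log.push(`navigate:${route}`); }
  highlight(id: string): void { this.log.push(`highlight:${id}`); }
  // A real implementation would start asynchronous text-to-speech here.
  speak(text: string): void { this.log.push(`speak:${text}`); }
}

// Hypothetical action: creates a task and describes exactly where it should appear.
function createTask(tasks: Task[], title: string, project: string, priority: Task["priority"]): ActionResult {
  const task: Task = { id: `task-${tasks.length + 1}`, title, project, priority };
  tasks.push(task);
  return {
    visual: { route: `/projects/${encodeURIComponent(project)}/tasks`, highlightId: task.id },
    speech: `Created ${priority}-priority task "${title}" in ${project}.`,
  };
}

// Immediacy: apply the visual update first, then start speaking,
// so the change is on screen before the verbal confirmation finishes.
function present(ui: FakeUi, result: ActionResult): void {
  ui.navigate(result.visual.route);
  ui.highlight(result.visual.highlightId);
  ui.speak(result.speech);
}

const store: Task[] = [];
const ui = new FakeUi();
present(ui, createTask(store, "Code review", "Playbook", "high"));
console.log(ui.log);
```

The recorded log shows the navigation, then the highlight, then the speech. Making `visual` a required field, rather than an optional callback, is the design choice that turns consistency from a team habit into a compile-time contract.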

The Confidence Transformation

The impact of visual confirmation went far beyond user satisfaction metrics (though those skyrocketed). It fundamentally changed how people interacted with F.R.I.D.A.Y.

From Skeptic to Power User

One early tester, a project manager we'll call Sarah, initially refused to trust voice commands. "I need to see my work," she insisted. After experiencing visual confirmation, her behavior shifted dramatically. Within a week, she was issuing complex multi-step commands ("Create three high-priority tasks for the Q1 campaign and navigate to the timeline view") with complete confidence. The visual feedback loop had eliminated her skepticism entirely.

"I'm not watching F.R.I.D.A.Y. work anymore," Sarah told us in a follow-up interview. "I'm just working, and she's keeping up. The interface shows me everything I need to know without me having to ask."

Why Visual Confirmation Is Non-Negotiable for Voice AI

As AI assistants become more sophisticated, the gap between what they can do and what users trust them to do becomes the primary adoption barrier. Voice confirmation alone cannot bridge this gap because it violates a fundamental principle of human-computer interaction: seeing is believing.

Humans have spent decades learning that computers do exactly what we tell them—no more, no less. AI breaks this contract by interpreting intent. Visual confirmation rebuilds trust by showing users that the AI's interpretation matches their intention. It's not about doubting the AI's capability; it's about confirming alignment.

Effective human-AI collaboration requires tight feedback loops. When a user issues a command and sees immediate visual confirmation, they get instant validation of two things: that the command executed, and that they communicated it effectively. This speeds up learning and builds confidence far faster than a voice-only system can.

Visual confirmation doesn't just help skeptics. It makes voice AI accessible to users with different cognitive styles and accessibility needs. Some users process visual information faster than auditory information; others benefit from having both channels reinforce the same message. Universal design isn't just ethical, it's essential for adoption.

The Ripple Effects

Once users trust visual confirmation, something remarkable happens: they start pushing the boundaries of what they ask the AI to do.

Instead of simple commands like "create a task," they start issuing complex, multi-faceted requests: "Create a project for the mobile app redesign, add five priority tasks based on our last meeting notes, and navigate to the timeline view so I can adjust deadlines."

Why? Because visual confirmation makes complexity manageable. Users can see each part of the command carried out in sequence, which builds confidence that the AI understands not just individual actions but orchestrated workflows.

The Future of Visual Confirmation

Where We're Heading

Visual confirmation is just the beginning. We're exploring how AI can use predictive visual changes—showing users what's about to happen before finalizing actions, creating a collaborative "yes/no" moment for complex operations.

Imagine saying "Reorganize these tasks by priority" and watching the interface preview the reorganization in real-time, allowing you to say "yes, perfect" or "no, group by deadline instead" before anything commits. The visual layer becomes not just confirmation but conversation.
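The preview-then-commit idea can be sketched as a small state machine: a request produces a pending preview that the interface renders, while the committed state stays untouched until the user confirms. This is a hypothetical TypeScript sketch of the concept, not a description of anything Peak ships; `Board`, `propose`, `requestReorder`, the ISO date strings, and the numeric priority scheme (1 is highest) are illustrative assumptions, and "group by deadline" is approximated here as ordering by deadline.

```typescript
// Hypothetical sketch of preview-then-commit; none of these names come from Peak.
interface Task {
  id: string;
  title: string;
  priority: number; // assumption: 1 is the highest priority
  deadline: string; // ISO 8601 date such as "2025-03-01", so string order matches date order
}

type SortKey = "priority" | "deadline";

// A pending change: a proposed ordering that has not been applied yet.
interface Preview {
  proposed: Task[];
}

function propose(tasks: readonly Task[], key: SortKey): Preview {
  // Copy before sorting so building a preview never mutates committed state.
  const proposed = [...tasks].sort((a, b) => {
    if (key === "priority") return a.priority - b.priority;
    return a.deadline < b.deadline ? -1 : a.deadline > b.deadline ? 1 : 0;
  });
  return { proposed };
}

class Board {
  private committed: Task[];
  private pending: Preview | null = null;

  constructor(tasks: Task[]) {
    this.committed = [...tasks];
  }

  // What the UI renders: the preview while one is pending, otherwise committed state.
  visible(): readonly Task[] {
    return this.pending ? this.pending.proposed : this.committed;
  }

  current(): readonly Task[] {
    return this.committed;
  }

  // "Reorganize these tasks by priority"; a follow-up request replaces the pending preview.
  requestReorder(key: SortKey): void {
    this.pending = propose(this.committed, key);
  }

  // "Yes, perfect": only now does the change commit.
  confirm(): void {
    if (this.pending) {
      this.committed = this.pending.proposed;
      this.pending = null;
    }
  }

  // Rejecting discards the preview; committed state was never touched.
  cancel(): void {
    this.pending = null;
  }
}

const board = new Board([
  { id: "a", title: "Write brief", priority: 2, deadline: "2025-03-01" },
  { id: "b", title: "Fix login bug", priority: 1, deadline: "2025-03-10" },
  { id: "c", title: "Update docs", priority: 3, deadline: "2025-02-20" },
]);
board.requestReorder("priority"); // preview shows b, a, c; committed order is still a, b, c
board.requestReorder("deadline"); // "no, by deadline instead": preview shows c, a, b
board.confirm();
console.log(board.current().map((t) => t.id)); // [ 'c', 'a', 'b' ]
```

In this sketch a correction such as "no, by deadline instead" simply replaces the pending preview, and nothing reaches the committed list until `confirm()` runs.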

We're also investigating how visual confirmation can work across devices—your voice command on mobile producing instant visual updates on your desktop, creating seamless multi-device workflows that feel like one unified system.

The Takeaway

If you're building voice AI or any AI-powered interface, visual confirmation isn't a nice-to-have feature—it's the foundational layer that makes AI adoption possible. Users will never fully trust what they cannot see, no matter how sophisticated your underlying technology.

The good news? Visual confirmation isn't technically complex. It's philosophically simple: show users their commands taking effect in real-time, with specificity and consistency. The hard part is committing to it as a non-negotiable principle from day one.

The question isn't whether to implement visual confirmation. The question is: how fast can you make it, how specific can you make it, and how consistently can you deliver it? Because in the race for AI adoption, trust is the only currency that matters—and visual confirmation is how you earn it.

Experience Visual Confirmation in Action

See how Peak's F.R.I.D.A.Y. combines voice intelligence with real-time visual feedback to create the most confident AI collaboration experience available today.

Peak — Where voice meets vision. Where AI meets confidence.

About the Author: Thomas Jackson is the founder and lead engineer of Peak, a voice-first operations platform powered by F.R.I.D.A.Y. AI. Peak pioneered visual confirmation patterns for voice-driven productivity tools and continues to push the boundaries of human-AI collaboration through sophisticated yet intuitive interface design.